Apple’s famous WWDC25 started yesterday and, as always, it made the news. In theory the “Worldwide Developers Conference” is exactly that - and those don’t usually make the news. Except Apple is a company with a trillion dollars in market cap and it uses the WWDC as a platform to announce and launch new products and services. So yeah, it often makes the news.

Apple unveiled a suite of updates to its multiple operating systems, services and software including the introduction of “Liquid Glass”, a new look that eerily reminds me of my old computer struggling to run Vista’s Aero.

Remember this? I also wish I didn’t.

The consensus across tech, business, and even mainstream press seems to be that the conference was underwhelming, with most left wondering “When will Siri actually become smart?”. I personally use Siri exclusively to set timers and look up currency exchange rates, despite being fully immersed in the Apple ecosystem. ChatGPT gets questions about strategy, technical implementation details or how to make my wife’s flowers look better for longer.

Investors are hungry for AI news, but why that should bother Apple, I’m not sure.

Buried under the headlines, however, is an update that I believe is quite momentous when it comes to understanding the future of AI and how we interact with it.

Local models are the future

Apple will be giving developers access to its on-device foundational models. The models themselves are little to write home about - their “server model” is inferior to GPT-4o, a model that recently celebrated its first birthday. The “on-device models” are comparable to Alibaba’s compact Qwen-3-4B and Qwen-2.5-3B. The real step forward is getting access to foundational models that run completely offline, pre-loaded on the devices of millions of users.

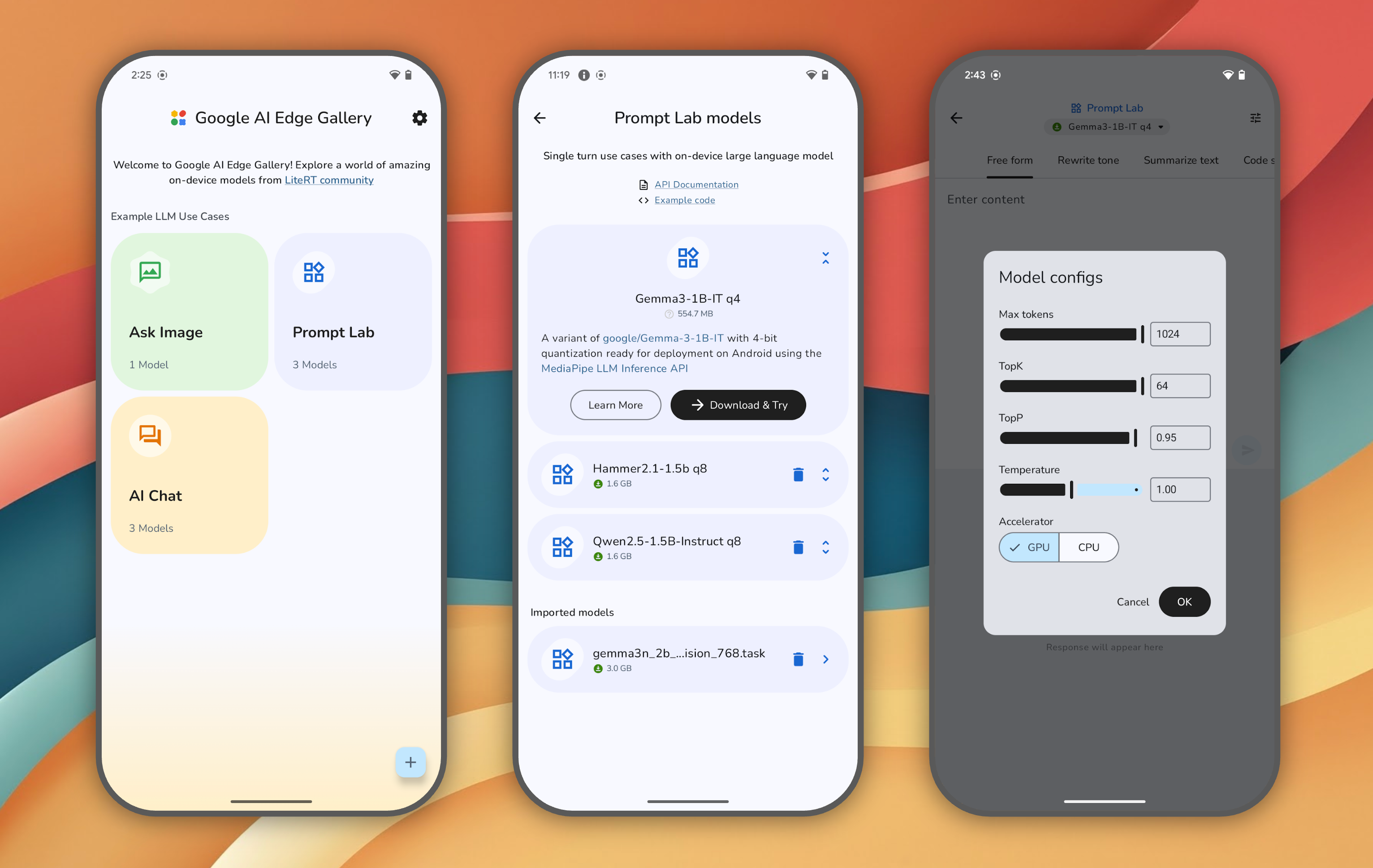

Google had already dipped its toes in the water of local models late last year with Google AI Edge, but it really was the exclusive domain of devs until the quiet launch of its Edge Gallery. What has prompted this change? To quote Simon Willison from his talk at the AI Engineer World’s Fair last week, “the most exciting trend in the last 6 months is that local models are good now”. On-device models bring a variety of benefits, including reduced latency, increased privacy (I’m not willing to bet Google isn’t logging interactions locally just yet) and, most importantly, offline possibilities.

Go ahead, download a model and try to jailbreak it.

This doesn’t just extend to foundational models - Hugging Face recently released SmolVLA, a vision-based model for robotics that “can run on a MacBook”. This is great news for all of us: models that are compute and size efficient are also energy efficient.

Once again I am reminded of when we first started KNOWRON. One of the most important and recurring issues we heard from users was the lack of connectivity, which prevented them from reaching our systems and getting the information they needed. Unfortunately, factory basements often had pretty spotty reception. While we’re still far away from the convenience of just downloading a .pdf, just downloading the model feels significantly closer.

LLM compute will become a commodity

On May 22nd, OpenAI announced a partnership with the United Arab Emirates as part of the “Stargate” initiative they had announced together with Trump earlier in the year. (Silicon Valley really has to stop naming things after magical objects; the fact that evil aliens bent on the subjugation of humanity came out of the original Stargate is too on the nose for my taste.)

Most headlines focused (rightly) on the political and economic repercussions of the announcement. Of particular interest was the love triangle between Gulf monarchies, the US State Department and AI giants. I recommend reading the UAE and the “OpenAI for Countries” press releases for yourself; they contain quite a few interesting passages (how exactly will models be “localized [for the country’s] culture”?). Once again, a small sentence in the press release is one of the most noteworthy elements to me:

Under the partnership, the UAE will become the first country in the world to enable ChatGPT nationwide—giving people across the country the ability to access OpenAI’s technology.

Sam has a knack for looking dystopian, doesn’t he?

Despite what some LinkedIn influencers will have you believe, this will not turn every single Emirati into a B2B SaaS guru locked into founder mode. It will, however, inaugurate in earnest the model through which I believe LLMs and AI will reach the general population: ubiquitous access.

The most apt comparison I can find is the advent of mobile internet. The internet was born as a military project in the 60s, the province of scientists and spooks. It later graduated to a larger user base of nerds and shut-ins, who marvelled at the possibility of meeting other people that were really into their niche interests. Today, most new couples meet online and the Chinese government has directed app providers to offer more than 250 million elderly Chinese friendlier versions of their products.

Back in the early 2000s, the introduction of GSM (2G) technology was announced to great fanfare in my home country, Costa Rica. At the time, the government struck a shady deal with French juggernaut Alcatel to provide the infrastructure necessary to bring coverage of a nascent, exciting technology to a small nation with very few internet-capable mobile devices. Comparing Alcatel and OpenAI seems trite, but before the collapse of the dotcom bubble, telecom companies were among the big winners of the frothiest market in living memory, reaching obviously unsustainable valuations.

The bubble, however, did indeed burst shortly thereafter and none of the erstwhile giants reached the same dizzying heights again. But there was no putting the genie of the internet back in the bottle. The penetration of mobile internet into the Global South had many people leapfrogging entire technologies: people who never even had a landline jumped straight to calling everybody through WhatsApp. The information superhighway reached towns that had not even been connected by dirt roads before.

Fast forward to today and choosing a network provider feels as easy (and arbitrary) as picking out a bag of sugar at the supermarket. Sure, you can reach for the organic unrefined sort, but if you just want to bake a cake, well, sugar is sugar: you’ll be reasonably well served by just buying the store brand. When I first moved to Germany, I bought an Aldi Talk prepaid line that I only switched from because I was tired of having to go down to Aldi to top the line up (they’ve since made it quite easy to pay through their app).

If you’re just scrolling through this article, I swear this image makes sense if you read the whole thing.

The same will be true of LLM inference. While a future Claude Opus 6 may top the software development benchmarks, I won’t need more than the cheapest, store-brand model if I need to ask my smart glasses to tell me whether they think the batter for my cake is fluffy enough.

Inference pricing is going down and models will compete more and more on cost - particularly in a B2B setting, where users will not look Claude or ChatGPT in the face anyway. Go ahead, check if you’re overspending. It’s only a matter of time before I use Check24 to get a bonus from switching my yearly AI contract from Hugging Face to Groq.

The future (still) belongs to the bold

The coming of the age of AI seems inevitable, in the same way that the internet feels inevitable in retrospect. And just as in the internet’s early days, today’s industrial giants are duelling high above people’s heads like Taoist immortals, seeking dominance over each other. The history of the internet holds valuable lessons for the bold upstarts (that’s you and me, dear reader) that might reach out and challenge the dominance of established giants. As Howard Zinn said, “History is instructive”.

It was not Alcatel who built Uber - Uber built Uber. The internet became the foundation upon which companies mastering the application layer (Salesforce, Airbnb) and the physical layer (AWS, Oracle) thrived. AI is laying down new foundations: compact offline models and cloud-based foundational models serving tokens for the lowest price possible. What bold and unique ideas will come out on top?

The leviathans are not about to complacently become the next WorldCom. OpenAI recently acquired Jony Ive’s io for a whopping $6.5B and bought Silicon Valley-based Windsurf for $3B last month. Both Windsurf and Cursor, extremely popular Visual Studio Code forks, already allow you to switch between models ad hoc - they’re some of the most successful businesses where AI is truly at the core, rather than merely supplementing an existing business model.

In the physical space, Meta has been integrating its models into its glasses: I’m even more of a believer in them now after seeing them being worn at a birthday party last week. Apple, king of physical devices, is betting on both of the trends I outlined in this article. The time has not yet come for the ubiquity of AI, so for the time being they’re pondering transparencies and corner radiuses.

Seriously, stop freaking out about Liquid Glass

Like the internet before it, AI saturating the air, invisible but inescapable, will open up innumerable possibilities. Humanity will have in its hands a true Stargate - it’s up to us to see what technological marvels or eldritch horrors we bring through it.